AmLight supporting Network Research Exhibition (NRE) demonstrations at the Supercomputing Conference (SC24)

This year at the Supercomputing 2024 (SC24) conference, taking place November 17–22 in Atlanta, GA, AmLight will support Network Research Exhibitions (NREs) that showcase cutting-edge advances in global networking for data-intensive sciences. These demonstrations will highlight innovative methods to accelerate scientific discovery, enable international collaboration, and streamline the handling of massive data flows.

As the scale of scientific data continues to expand, so do the complexities of managing network infrastructures, scientific workflows, and globally distributed resources. The NREs at SC24 aim to tackle these challenges by demonstrating next-generation technologies, dynamic provisioning systems, and high-performance networking solutions designed to meet the needs of disciplines ranging from astrophysics to genomics. With contributions from research organizations spanning the Americas, Europe, Asia, Africa, and Oceania, SC24’s NREs will pave the way for smarter, faster, and more efficient global research and education networks.

Please join us at the following sessions:

| Saturday, November 16 | Title | Presenter | Location |
|---|---|---|---|
| 1:45 PM | Resources supported by AMPATH/AmLight to enable ML/AI | Vasilka Chergarova | ART@SC24 Workshop, Georgia World Congress Center, Room B206 |

| Monday, November 18 | Title | Presenter | Location |
|---|---|---|---|
| 12:18 PM – 12:24 PM | SANReN’s 100Gbps Data Transfer Service: Transferring data fast! | Kasandra Pillay | INDIS |
| 2:00 PM – 2:20 PM | Recent Linux Improvements that Impact TCP Throughput: Insights from R&E Networks | Marcos Schwarz | INDIS |
| 2:40 PM – 3:00 PM | Leveraging In-band Network Telemetry for Automated DDoS Detection in Production Programmable Networks: The AmLight Use Case | Hadi Sahin | INDIS |

| Tuesday, November 19 | Title | Presenter | Location |
|---|---|---|---|
| 11:20 AM – 11:40 AM | Status of FABRIC integration with the National Research Platform (NRP); Global P4 Lab | Frank Wuerthwein (UCSD); Marcos Schwarz (RNP) | SCinet Theater 2049 |
| 11:30 AM | AtlanticWave SDX 2.0: Dynamic Cross-Domain Network Orchestration and User-Friendly Provisioning for Major Facilities and R&E Networks | Julio Ibarra and Luis Marin Vera | RENCI booth 3923 |
| 2:40 PM – 3:00 PM | Ecosystems project – South Africa | Lara Timm | Illuminations Pavilion |
| 4:40 PM | High-Speed data transfers from South Africa to USA! | Kasandra Pillay | SCinet Theater 2049; Caltech booth 845 |

| Wednesday, November 20 | Title | Presenter | Location |
|---|---|---|---|
| 5:40 PM – 6:00 PM | Global P4 Lab | Marcos Schwarz | SCinet Theater 2049 |

| Thursday, November 21 | Title | Presenter | Location |
|---|---|---|---|
| 11:30 AM – 12:00 PM | Ecosystems project – South Africa | Lara Timm | Caltech booth 845 |
| 2:20 PM – 2:40 PM | AtlanticWave-SDX 2.0: Improving network services for Major Facilities and R&E networks using Dynamic Orchestration and Service Provisioning | Jeronimo Bezerra | SCinet Theater 2049 |

This year, the AmLight team is supporting the following Network Research Exhibitions (NREs):

SC24-NRE-013: A Next Generation Multi-Terabit/sec Campus and Global Network System for Data Intensive Sciences

FABRIC – Ciena, Booth #1940

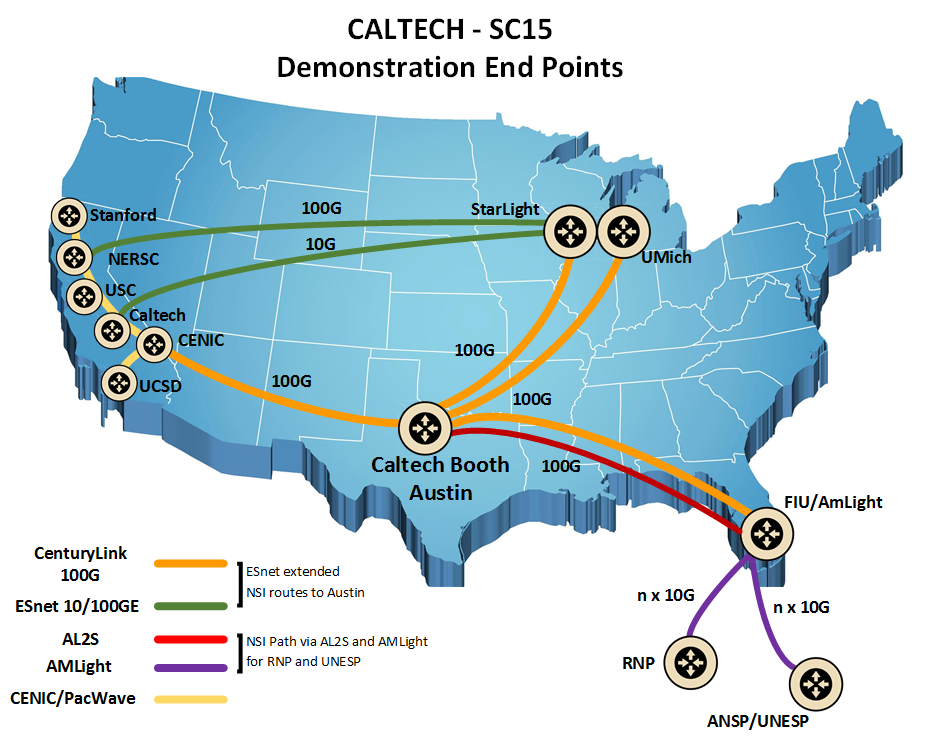

The Global Network Advancement Group (GNA-G), together with its Data Intensive Sciences (DIS) and SENSE/AutoGOLE Working Groups, is a worldwide collaboration that brings together major science programs, research and education networks, and advanced network R&D projects spanning the U.S., Europe, Asia, Latin America, and Oceania. The collaboration is developing a next-generation network-integrated system to meet the challenges of data-intensive sciences, pointing the way toward more intelligent operation of R&E networks and toward new methods and modes of network operation and capacity management that will benefit both network research and production teams. The continued use of Ciena Waveserver Ai platforms will be a key enabler of these developments. In partnership with several NREs covering specific areas, this NRE will present and demonstrate many aspects of the next-generation system that the GNA-G is developing for data-intensive sciences, and will help set the course for future R&E network operations.

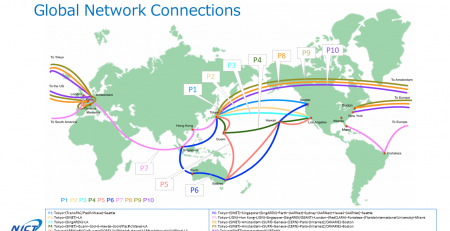

SC24-NRE-014: MMCFTP’s Data Transfer Experiment Using Ten 100 Gbps Lines Between Japan and USA

NICT, Booth #3155

Today, academic networks are interconnected through international lines of over 100 Gbps. By combining and using these lines simultaneously, we can achieve the faster data transfers needed for cutting-edge scientific and technological research. In this demonstration, Japan and the US will be connected via 10 x 100 Gbps lines, and memory-to-memory transfer experiments will be conducted using MMCFTP, a high-speed transfer tool that supports multipath transfers. We aim to achieve a peak speed of 800 Gbps.
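The 800 Gbps goal is easier to interpret next to the nominal aggregate capacity of the ten lines. The sketch below does that back-of-envelope arithmetic in Python. It is not part of MMCFTP, and the 1 PB dataset size is a hypothetical example rather than a figure from the NRE.

```python
# Back-of-envelope arithmetic for the setup described above:
# ten parallel 100 Gbps lines and a stated peak target of 800 Gbps.

LINES = 10            # number of parallel lines (from the text)
LINE_GBPS = 100.0     # nominal capacity per line, Gbit/s
TARGET_GBPS = 800.0   # stated peak-speed goal, Gbit/s


def aggregate_capacity_gbps(lines: int, line_gbps: float) -> float:
    """Nominal capacity when every line is used simultaneously."""
    return lines * line_gbps


def transfer_time_s(data_bytes: float, rate_gbps: float) -> float:
    """Seconds to move data_bytes at a sustained rate_gbps (decimal units)."""
    return data_bytes * 8 / (rate_gbps * 1e9)


if __name__ == "__main__":
    capacity = aggregate_capacity_gbps(LINES, LINE_GBPS)
    print(f"aggregate capacity: {capacity:.0f} Gbps")
    print(f"target utilization: {TARGET_GBPS / capacity:.0%}")
    one_pb = 1e15  # hypothetical 1 PB dataset, for illustration only
    hours = transfer_time_s(one_pb, TARGET_GBPS) / 3600
    print(f"1 PB at {TARGET_GBPS:.0f} Gbps: {hours:.2f} h")
```

Read this way, the target corresponds to keeping roughly 80% of the combined nominal capacity busy at once, which is why a multipath tool is needed rather than a single stream on one line.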

SC24-NRE-015: High-Speed Data Transfers from South Africa to USA!

The AmLight network uses a hybrid strategy that combines optical spectrum and leased capacity to build a reliable, leading-edge network infrastructure for research and education. AmLight supports the high-performance network connectivity required by international science and engineering research and education collaborations involving the National Science Foundation (NSF) research community, with expansion to South America and West Africa. This collaboration offers a unique opportunity to work alongside SANReN, bringing African participation into the experiments while closely monitoring the performance of AmLight-ExP’s SACS link through a diverse set of experiments arranged for SC24. The aim is to demonstrate SANReN’s 100 Gbps Data Transfer Nodes (DTNs), which have been deployed to help researchers with their large data transfer needs to the US and internationally. Large data transfers to the US usually use AmLight-ExP’s 100G link over the SACS submarine cable system, which established a vital connection between South Africa and the United States, via Brazil, to Miami.
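Why long-haul 100 Gbps transfers need careful host tuning can be seen from the bandwidth-delay product (BDP): a single TCP flow needs roughly rate × RTT bytes in flight to keep the path full. The Python sketch below assumes a hypothetical 200 ms round-trip time between South Africa and the US; the actual RTT of the path is not stated here, so the numbers are illustrative only.

```python
# Bandwidth-delay product (BDP) arithmetic for a long-haul 100 Gbps path.
# ASSUMPTION: the 200 ms RTT below is hypothetical, not a measured value.

RATE_GBPS = 100.0   # DTN line rate from the text
RTT_MS = 200.0      # hypothetical round-trip time, South Africa <-> US


def bdp_bytes(rate_gbps: float, rtt_ms: float) -> float:
    """Bytes that must be in flight to keep a path of this rate and RTT full."""
    return rate_gbps * 1e9 * (rtt_ms / 1000.0) / 8


def window_limited_gbps(window_bytes: float, rtt_ms: float) -> float:
    """Upper bound on one TCP flow's throughput for a given window size."""
    return window_bytes * 8 / (rtt_ms / 1000.0) / 1e9


if __name__ == "__main__":
    print(f"BDP: {bdp_bytes(RATE_GBPS, RTT_MS) / 1e9:.2f} GB")
    window = 64 * 2**20  # a 64 MiB window, for comparison
    print(f"64 MiB window caps one flow at "
          f"{window_limited_gbps(window, RTT_MS):.2f} Gbps")
```

Under these assumptions a single flow would need about 2.5 GB in flight, far more than a 64 MiB window allows. That gap is one reason DTN tuning (on Linux, for example, the `net.core.rmem_max`/`net.core.wmem_max` and `net.ipv4.tcp_rmem`/`net.ipv4.tcp_wmem` sysctls) and parallel streams matter for transfers like these.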

SC24-NRE-016: NA REX Demonstration

NA REX is a collaboration directed at improving research and education networking and open exchange points in North America, and by extension globally, enabled by participating open international R&E exchange points, national R&E networks, and regional R&E networks. NA REX is being designed specifically to support R&E communities to accelerate science research, especially multi-domain science, data intensive science, and research testbeds. To accomplish this goal, the NA REX consortium is designing an international infrastructure based on 400 Gbps end-to-end paths interconnecting major open exchange points. Other service components include open exchange Data Transfer Nodes (DTNs), and AutoGOLE/NSI dynamic provisioning.

SC24-NRE-022: AutoGOLE/SENSE: Edge Site Resource Integration with Network Services

Department of Energy, Booth #3401

The GNA-G AutoGOLE/SENSE WG demonstrations will present the latest features and status of the AutoGOLE and SENSE infrastructure and services. This showcase will include demonstrations of technologies, methods, and systems for dynamic, multi-domain Layer 2 and Layer 3 network service provisioning. These services focus on the challenges faced by data-intensive science programs and workflows, such as the Large Hadron Collider (LHC) and the Vera C. Rubin Observatory, as well as challenges in many other disciplines. The services are designed to support multi-petabyte transactions across a global footprint, represented by a persistent testbed spanning the US, Europe, Asia-Pacific, and Latin America. The SC24 demonstrations will focus on new features for programmatic integration of domain science workflows and for real-time monitoring and troubleshooting.

SC24-NRE-024: FABRIC

FABRIC – Ciena, Booth #1940

This demonstration will show the FABRIC Research Cyberinfrastructure in operation: https://portal.fabric-testbed.net

FABRIC (Adaptive ProgrammaBle Research Infrastructure for Computer Science and Science Applications) is an international infrastructure that enables cutting-edge experimentation and research at scale in the areas of networking, cybersecurity, distributed computing, storage, virtual reality, 5G, machine learning, and science applications. The FABRIC infrastructure includes a distributed set of equipment at commercial colocation spaces, national labs, and campuses. Each FABRIC site has large amounts of compute and storage, interconnected by high-speed, dedicated optical links. FABRIC also connects to specialized testbeds (5G/IoT PAWR, NSF Clouds), the Internet, and high-performance computing facilities to create a rich environment for a wide variety of experimental activities.

SC24-NRE-025: HECATE Merges with PolKA AI-enabled Traffic Engineering for Data-intensive Science

California Institute of Technology, Booth #839

Traffic engineering and path computation techniques such as MPLS-TE (Multiprotocol Label Switching Traffic Engineering), Google’s B4, and Microsoft’s SWAN (Software-driven WAN) provide ways for routers to greedily select flow routing patterns globally in order to increase path utilization. However, these techniques require meticulously designed heuristics to calculate optimal routes, and they do not distinguish between the characteristics of arriving flows. Here, we are merging Hecate’s data-driven learning with the PolKA (Polynomial Key-based Architecture) Source Routing (SR) approach to translate data-driven flow control into agile routing configurations that optimize traffic engineering.

SC24-NRE-029: Multi Domain Experiments Using ESnet SENSE on the National Research Platform / PacWave / FABRIC

FABRIC – Ciena, Booth #1940, John Graham.

The San Diego Supercomputer Center (SDSC) and the Prototype National Research Platform (PNRP), NSF Award 2112167 (Category II), are providing twelve Xilinx Alveo U55C FPGAs in six servers for researchers at the SC24 Network Research Exhibition (NRE). These devices will be used in ESnet SmartNIC P4 experiments with Segment Routing over IPv6 (SRv6) micro-segment identifiers (uSIDs), connecting the FABRIC testbed StarLight site (FABRIC-STAR) to various other sites. Each U55C FPGA has dual 100 Gigabit Ethernet interfaces and is integrated into the SDSC Automated Global Optical Lightpath Exchange (AutoGOLE) SDN for End-to-end Networked Science at the Exascale (SENSE) network topology. The PacWave NSF Award 2029306 (IRNC Core Improvement: Accelerating Scientific Discovery & Increasing Access – Enhancing & Extending the Pacific Wave Exchange Fabric) has supplied seven 1U DC-powered measurement and monitoring servers, known as Interactive Global Research Observatory Knowledgebase (IGROK) nodes. Each node is equipped with a BlueField-2 Data Processing Unit (DPU), also with dual 100 Gigabit Ethernet interfaces, and is integrated into the PacWave AutoGOLE/SENSE network topology.

SC24-NRE-030: GP4L – Global P4 Lab

This showcase will include demonstrations of newly deployed sites, especially the integration of BlueField-2 nodes at seven PacWave peering sites, and performance benchmark results from the newly supported software data planes based on freeRtr/DPDK and VPP. It will also demonstrate GP4L orchestration and automation capabilities and support other NREs that are experimenting at scale with advanced or novel networking protocols and use cases, such as PolKA (Polynomial Key-based Architecture), a stateless source routing protocol based on arithmetic operations over a polynomial-encoded route label.
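The idea of a stateless, polynomial-encoded route label can be illustrated with a toy model of the arithmetic described in the published PolKA work: each core node is assigned a node ID that is an irreducible polynomial over GF(2), the edge computes a single route label with the Chinese Remainder Theorem, and each hop finds its output port as the remainder of the route label divided by its own node ID. The Python sketch below is a simplified illustration under those assumptions, with hypothetical node IDs and ports; it is not the GP4L or PolKA data-plane code.

```python
# Toy model of PolKA-style source routing arithmetic in GF(2)[x].
# Polynomials are encoded as Python ints: bit i is the coefficient of x^i.
# Node IDs and port numbers are hypothetical examples, not GP4L values.


def pdeg(a: int) -> int:
    """Degree of polynomial a (-1 for the zero polynomial)."""
    return a.bit_length() - 1


def pdivmod(a: int, b: int) -> tuple[int, int]:
    """Quotient and remainder of a / b in GF(2)[x] (b must be nonzero)."""
    q, db = 0, pdeg(b)
    while a and pdeg(a) >= db:
        shift = pdeg(a) - db
        q ^= 1 << shift
        a ^= b << shift  # subtraction is XOR in GF(2)
    return q, a


def pmul(a: int, b: int) -> int:
    """Carry-less (GF(2)) polynomial multiplication."""
    r = 0
    while b:
        if b & 1:
            r ^= a
        a <<= 1
        b >>= 1
    return r


def pinv(a: int, m: int) -> int:
    """Inverse of a modulo m in GF(2)[x], via the extended Euclidean algorithm."""
    r0, r1 = m, pdivmod(a, m)[1]
    s0, s1 = 0, 1
    while r1:
        q, r = pdivmod(r0, r1)
        r0, r1 = r1, r
        s0, s1 = s1, s0 ^ pmul(q, s1)
    if r0 != 1:
        raise ValueError("a is not invertible modulo m")
    return pdivmod(s0, m)[1]


def route_id(node_ids: list[int], port_ids: list[int]) -> int:
    """CRT: find R with R mod node_ids[i] == port_ids[i] for every hop i."""
    m = 1
    for n in node_ids:
        m = pmul(m, n)
    r = 0
    for n, p in zip(node_ids, port_ids):
        mi = pdivmod(m, n)[0]  # product of all the other node IDs
        r ^= pmul(pmul(p, mi), pinv(mi, n))
    return pdivmod(r, m)[1]


def forward(route: int, node_id: int) -> int:
    """Per-packet work at a core node: output port = routeID mod nodeID."""
    return pdivmod(route, node_id)[1]


if __name__ == "__main__":
    # Three core nodes with distinct irreducible node IDs:
    # x^3 + x + 1, x^3 + x^2 + 1, x^4 + x + 1
    nodes = [0b1011, 0b1101, 0b10011]
    ports = [5, 2, 9]  # desired output port per hop (degree < node ID degree)
    rid = route_id(nodes, ports)
    print(f"routeID = {rid:#b}")
    print([forward(rid, n) for n in nodes])
```

In this model, steering a flow onto a different path only requires the edge to compute a different route label; the core nodes hold no per-flow or per-route tables, only their own node ID, which is what makes the scheme stateless.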

SC24-NRE-031: High Performance Networking with the Sao Paulo Backbone SP Linking 8 Universities and the Bella Link

The research and education network of Sao Paulo (rednesp), formerly ANSP (Academic Network at Sao Paulo), connects dozens of research and education institutions in the State of Sao Paulo, Brazil, and also provides international connections to the USA and Europe. In 2022, rednesp designed a new backbone, known as “Backbone SP”, and began deploying it to connect 8 major research and education institutions with 100 Gbps links. The rednesp team will try to improve on last year’s result between Sao Paulo and the USA, when 320 Gbps of total bandwidth was reached. At SC24, the target is 400 Gbps, which would be a record throughput between Brazil and the USA over academic networks. The team will also demonstrate technical characteristics of the Backbone SP, such as latency, bandwidth, and jitter, and its integration with international links, as well as the network’s usefulness in supporting data-intensive science applications in areas such as high energy physics and cryo-electron microscopy. In addition, 400 Gbps equipment is being purchased and deployed, and it should be possible to demonstrate connections at that speed within the state of São Paulo.
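The text does not say which tool or jitter definition the rednesp team will use. As one widely used definition, RFC 3550 (RTP, section 6.4.1) estimates interarrival jitter as a running average of the change in transit time between consecutive packets, smoothed with a gain of 1/16. A minimal Python sketch of that estimator follows; the timestamps are hypothetical, in milliseconds.

```python
# Interarrival jitter estimator from RFC 3550, section 6.4.1.
# Timestamps are hypothetical and expressed in milliseconds.


def rfc3550_jitter(send_ts: list[float], recv_ts: list[float]) -> float:
    """Return the smoothed interarrival jitter after the last packet."""
    if len(send_ts) != len(recv_ts):
        raise ValueError("send and receive timestamp lists must match")
    j = 0.0
    for i in range(1, len(send_ts)):
        # D(i-1, i): change in transit time between consecutive packets
        d = (recv_ts[i] - recv_ts[i - 1]) - (send_ts[i] - send_ts[i - 1])
        j += (abs(d) - j) / 16
    return j


if __name__ == "__main__":
    sent = [0.0, 10.0, 20.0, 30.0]
    received = [60.0, 70.5, 80.0, 90.25]  # hypothetical arrival times
    print(f"jitter = {rfc3550_jitter(sent, received):.4f} ms")
```

Because only differences between consecutive transit times enter the formula, a constant clock offset between sender and receiver cancels out, so this estimator does not need synchronized clocks, unlike one-way latency measurements.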

SC24-NRE-032: PolKA Routing Approach to Support Traffic Steering for Data-intensive Science

When competing terabit-per-second data flows must cross complex intercontinental networks for Data Intensive Science (DIS) research programs, the main goals of traffic steering are to optimize the use of network resources, improve data transfer performance, and ensure reliable and efficient network operation. These requirements are driven by the global distribution of DIS workflows and the demanding projections for the future. In this NRE, we present how PolKA (Polynomial Key-based Architecture) Source Routing (SR) offers Better, Faster, and Stronger functionalities to address the traffic steering challenges of DIS networks.

SC24-NRE-036: Streaming Event Horizon Telescope Data to MIT-Haystack

Indiana University, Booth #1401

The Event Horizon Telescope (EHT) is an international collaboration of 11 telescopes capturing images of black holes. The captured data is sent to MIT Haystack Observatory for processing. Because of the volume of data involved, it is currently recorded to disk and physically shipped to Haystack. Each of these instruments has a different level of connectivity to its regional, wide-area, and international research and education (R&E) networks. This demonstration aims to shorten the time to science by helping the EHT collaboration make better use of available network resources to transfer a subset of data to MIT Haystack Observatory, confirming each site’s configuration before a full EHT run takes place.